Evaluation

Module: cutoop.eval_utils.

This module contains gadgets used to evaluate pose estimation models.

The core structure of evaluation is the dataclass DetectMatch, which inherits from the dataclass GroundTruth and therefore contains all the information required for metrics computation.

Evaluation Data Structure

Prediction and its matched ground truth data provided for metric computation.

It contains 6D pose (rotation, translation, scale) information for both ground truth and predictions, as well as ground truth symmetry labels.

Camera intrinsics.

Confidence output by the detection model (N,).

Ground truth 3D affine transformation for each instance (Nx4x4), without scaling.

Ground truth class label of each object.

The number of objects annotated in GT (NOT the number of detected objects).

Ground truth bounding box side lengths (Nx3), a.k.a. bbox_side_len in image_meta.ImageMetaData.

Ground truth symmetry labels (N,) (each element is a rotation.SymLabel).

>>> list(map(str, result.gt_sym_labels))

['y-cone', 'x-flip', 'y-cone', 'y-cone', 'none', 'none', 'none', 'none', 'x-flip']

Path to the rgb image (for drawing).

Prediction of 3D affine transformation for each instance (Nx4x4).

Prediction of 3D bounding box sizes (Nx3).

Use slice or integer index to get a subset sequence of data.

Note that gt_n_objects would be lost.

>>> result[1:3]

DetectMatch(gt_affine=array([[[ 0.50556856, 0.06278258, 0.86049914, -0.2933575 ],

[-0.57130486, -0.72301346, 0.3884099 , 0.5845589 ],

[ 0.6465379 , -0.68797517, -0.3296649 , 1.7387577 ],

[ 0. , 0. , 0. , 1. ]],

[[ 0.38624182, 0.00722773, -0.92236924, 0.02853431],

[ 0.6271931 , -0.735285 , 0.25687516, 0.6061586 ],

[-0.6763476 , -0.67771953, -0.28853098, 1.6325369 ],

[ 0. , 0. , 0. , 1. ]]],

dtype=float32), gt_size=array([[0.34429058, 0.00904833, 0.19874577],

[0.32725419, 0.1347834 , 0.32831856]]), gt_sym_labels=array([SymLabel(any=False, x='half', y='none', z='none'),

SymLabel(any=False, x='none', y='any', z='none')], dtype=object), gt_class_labels=array([48, 9]), pred_affine=array([[[ 0.50556856, 0.06278258, 0.86049914, -0.2933575 ],

[-0.57130486, -0.72301346, 0.3884099 , 0.5845589 ],

[ 0.6465379 , -0.68797517, -0.3296649 , 1.7387577 ],

[ 0. , 0. , 0. , 1. ]],

[[ 0.38624182, 0.00722773, -0.92236924, 0.02853431],

[ 0.6271931 , -0.735285 , 0.25687516, 0.6061586 ],

[-0.6763476 , -0.67771953, -0.28853098, 1.6325369 ],

[ 0. , 0. , 0. , 1. ]]],

dtype=float32), pred_size=array([[0.3535623 , 0.00863385, 0.21614327],

[0.31499929, 0.1664661 , 0.41965601]]), image_path=['../../misc/sample/0000_color.png', '../../misc/sample/0000_color.png'], camera_intrinsics=[CameraIntrinsicsBase(fx=1075.41, fy=1075.69, cx=631.948, cy=513.074, width=1280, height=1024), CameraIntrinsicsBase(fx=1075.41, fy=1075.69, cx=631.948, cy=513.074, width=1280, height=1024)], detect_scores=None, gt_n_objects=None)
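The indexing behaviour described above can be illustrated with a small stand-in. The following is only a sketch: `ToyMatch` is a hypothetical two-field dataclass, not the library's DetectMatch, and keeping a leading instance axis for integer indices is an assumption made for the example.

```python
from dataclasses import dataclass
from typing import Optional

import numpy as np


@dataclass
class ToyMatch:
    """Hypothetical stand-in for DetectMatch (not the library class)."""

    gt_class_labels: np.ndarray  # (N,)
    pred_size: np.ndarray  # (N, 3)
    gt_n_objects: Optional[int] = None

    def __getitem__(self, index):
        if isinstance(index, int):
            index = [index]  # keep the leading instance axis (an assumption)
        return ToyMatch(
            gt_class_labels=self.gt_class_labels[index],
            pred_size=self.pred_size[index],
            gt_n_objects=None,  # a subset no longer carries the GT object count
        )


match = ToyMatch(
    gt_class_labels=np.array([7, 48, 9, 3]),
    pred_size=np.ones((4, 3)),
    gt_n_objects=4,
)
subset = match[1:3]  # two instances
single = match[0]  # one instance, still batched
```

Every per-instance array is sliced with the same index, while the scalar gt_n_objects is reset to None because it no longer describes the subset.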

Calibrate the rotation of pose prediction according to gt symmetry labels using rotation.rot_canonical_sym().

Parameters: silent – enable this flag to hide the tqdm progress bar.

Note

This function does not modify the value in-place. Instead, it produces a new calibrated result.

Returns: a new DetectMatch.

Concatenate multiple results into a single one.

Note that the detect_scores (gt_n_objects) should either be all None or all ndarray (int).

Returns: a DetectMatch combining all items.

Compute IoUs, rotation differences and translation shifts. It is useful if you need to compute other custom metrics based on them.

When computeIOU is set to False, it returns a NumPy array of zeros.

Parameters: use_precise_rot_error – use the analytic method for rotation error calculation instead of the discrete method (enumeration). The resulting errors should be slightly smaller.

Returns: (ious, theta_degree, shift_cm), where

- ious: (N,).

- theta_degree: (N,), unit is degree.

- shift_cm: (N,), unit is cm.

>>> iou, deg1, sht = result.criterion()

>>> iou, deg2, sht = result.criterion(use_precise_rot_error=True)

>>> assert np.abs(deg1 - deg2).max() < 0.05
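As an illustration of building a custom metric on top of these three arrays, here is a minimal sketch. The arrays are synthetic stand-ins for the output of criterion(), the helper `pose_accuracy` is hypothetical, and the use of inclusive thresholds is an assumption rather than the library's documented convention.

```python
import numpy as np

# Synthetic stand-ins for (ious, theta_degree, shift_cm); the values are made up.
ious = np.array([0.82, 0.40, 0.65, 0.10])
theta_degree = np.array([3.0, 12.0, 4.5, 40.0])
shift_cm = np.array([1.2, 6.0, 1.9, 15.0])


def pose_accuracy(theta_degree, shift_cm, max_degree, max_cm):
    """Fraction of instances within both the rotation and translation thresholds."""
    hit = (theta_degree <= max_degree) & (shift_cm <= max_cm)
    return float(hit.mean())


# "5 degrees, 2 cm" accuracy and IoU accuracy at a 0.5 threshold.
acc_5deg_2cm = pose_accuracy(theta_degree, shift_cm, 5.0, 2.0)
iou_acc_50 = float((ious >= 0.5).mean())
```

Because the arrays are aligned per instance, any boolean combination of them can be averaged in the same way.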

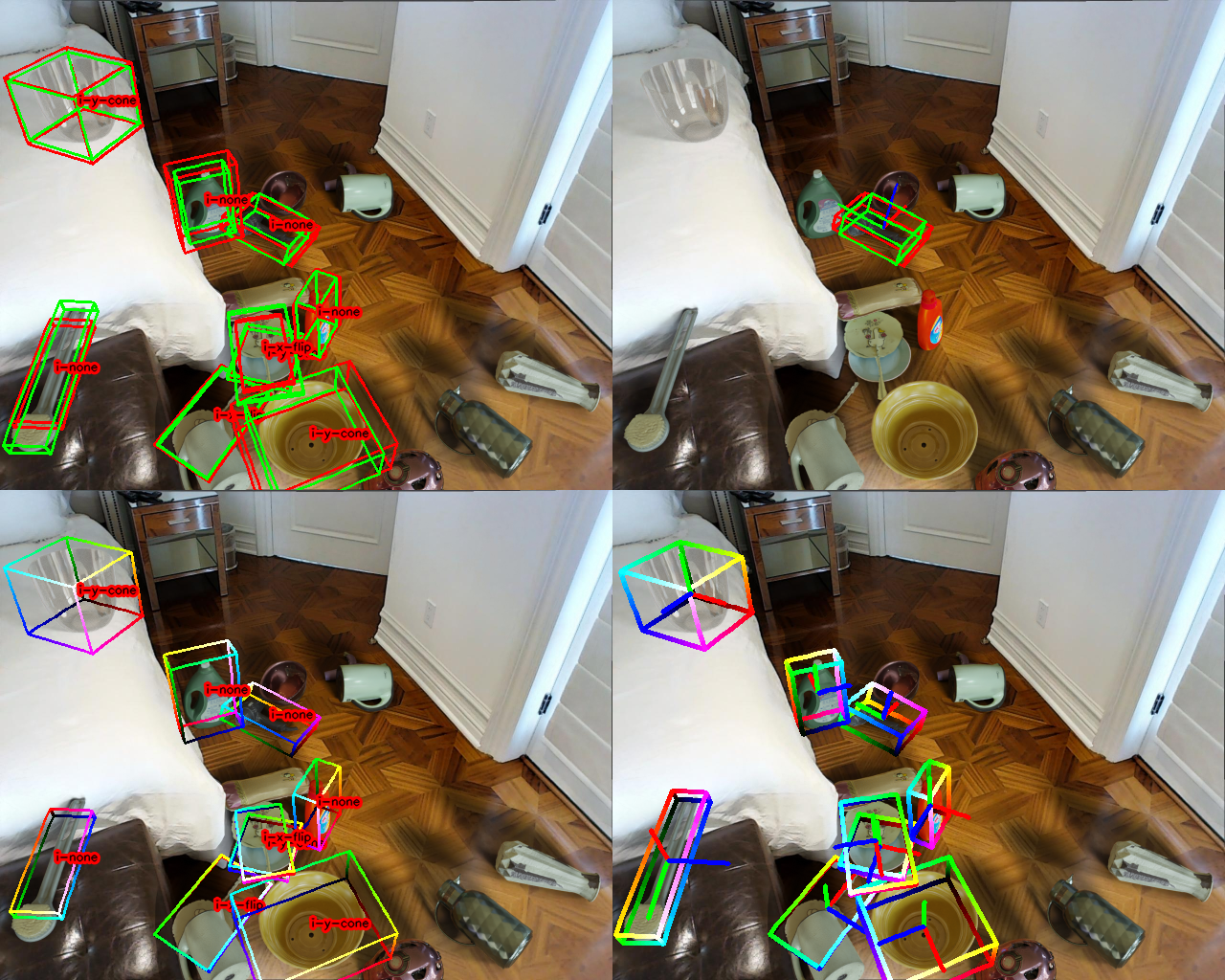

Draw the bounding boxes of gt and prediction on the image. Requires image_path and camera_intrinsics to be set.

Parameters:

- path – output path for rendered image

- index – which prediction to draw; leave it as the default None to draw everything on the same image.

- image_root – root directory of the images; image_path is assumed to store paths relative to it.

- draw_gt – whether to draw gt bbox.

- draw_pred – whether to draw predicted bbox.

- draw_label – whether to draw symmetry label on the object

- draw_pred_axes_length – specify a number to indicate the length of axes of the predicted pose.

- draw_gt_axes_length – specify a number to indicate the length of axes of the gt pose.

- thickness – specify line thickness.

>>> result.draw_image(

... path='source/_static/gr_1.png'

... ) # A

>>> result.draw_image(

... path='source/_static/gr_2.png',

... index=4,

... draw_label=False,

... draw_pred_axes_length=0.5,

... ) # B

>>> result.draw_image(

... path='source/_static/gr_3.png',

... draw_gt=False,

... ) # C

>>> result.draw_image(

... path='source/_static/gr_4.png',

... draw_pred=False,

... draw_label=False,

... draw_gt_axes_length=0.3,

... thickness=2,

... ) # D

- A (top left): Draw all boxes.

- B (top right): Draw one object.

- C (bottom left): Draw predictions.

- D (bottom right): Draw GT with poses.

Construct matching result from the output of a detection model.

Construct matching result from GT (i.e. use GT detection).

Compute several pose estimation metrics.

Parameters:

- iou_thresholds – threshold list for computing IoU acc. and mAP.

- pose_thresholds – rotation-translation threshold list for computing pose acc. and mAP.

- iou_auc_ranges – list of ranges to compute IoU AUC.

- pose_auc_range – degree range and shift range.

- auc_keep_curve – enable this flag to output curve points for drawing.

- criterion – since the computation of IoU is slow, you may cache the result of cutoop.eval_utils.DetectMatch.criterion() and provide it here, in exactly the same format.

- use_precise_rot_error – see eval_utils.DetectMatch.criterion().

Returns: the returned format can be formalized as

- 3D IoU:

- average (mIoU): per-class average IoU and mean average IoU.

- accuracy: per-class IoU accuracy and mean accuracy over a list of thresholds.

- accuracy AUC: normalised AUC of the IoU’s accuracy-thresholds curve.

- average precision (detected mask, if providing pred_score): per-class IoU average precision and mean average precision, using detection confidence (mask score) as recall.

- Pose:

- average: per-class average rotation and translation error.

- accuracy: per-class degree-shift accuracy and mean accuracy over a list of thresholds.

- accuracy AUC: normalised AUC of the rotation’s, translation’s and pose’s accuracy-thresholds curve. For pose, AUC is generalised to “volume under surface”.

- accuracy AUC: AUC of the IoU accuracy-thresholds curve.

- average precision (detected mask, if providing pred_score): per-class degree-shift average precision and mean average precision over a list of thresholds, using detection confidence (mask score) as recall.

Camera intrinsics.

Confidence output by the detection model (N,).

Ground truth 3D affine transformation for each instance (Nx4x4), without scaling.

Ground truth class label of each object.

The number of objects annotated in GT (NOT the number of detected objects).

Ground truth bounding box side lengths (Nx3), a.k.a. bbox_side_len in image_meta.ImageMetaData.

Ground truth symmetry labels (N,) (each element is a rotation.SymLabel).

>>> list(map(str, result.gt_sym_labels))

['y-cone', 'x-flip', 'y-cone', 'y-cone', 'none', 'none', 'none', 'none', 'x-flip']

Path to the rgb image (for drawing).

Concatenate multiple results into a single one.

Note that the detect_scores (gt_n_objects) should either be all None or all ndarray (int).

Returns: a DetectOutput combining all items.

This dataclass is a subset of DetectMatch, which can be constructed directly from GT image data before running the inference process.

Camera intrinsics.

Ground truth 3D affine transformation for each instance (Nx4x4), without scaling.

Ground truth class label of each object.

Ground truth bounding box side lengths (Nx3), a.k.a. bbox_side_len in image_meta.ImageMetaData.

Ground truth symmetry labels (N,) (each element is a rotation.SymLabel).

>>> list(map(str, result.gt_sym_labels))

['y-cone', 'x-flip', 'y-cone', 'y-cone', 'none', 'none', 'none', 'none', 'x-flip']

Path to the rgb image (for drawing).

Concatenate multiple results into a single one.

Returns: a GroundTruth combining all items.

Metrics Data Structure

Bases: object

See DetectMatch.metrics().

The mean metrics of all occurred classes.

Mapping from class label to its metrics.

Write metrics to a text file in JSON format.

Parameters: mkdir – enable this flag to automatically create the parent directory.

Load metrics data from a JSON file.
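A JSON round trip of this kind can be sketched with the standard library alone. Everything named here (`ToyMetrics`, `dump_json`, `load_json`) is hypothetical and only mirrors the behaviour described above, including the mkdir flag; it is not the library's implementation.

```python
import json
import tempfile
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class ToyMetrics:
    """Hypothetical stand-in for a metrics dataclass (not the library class)."""

    iou_mean: float
    deg_mean: float
    iou_acc: list = field(default_factory=list)


def dump_json(metrics, path, mkdir=False):
    """Write the dataclass as JSON text, optionally creating parent directories."""
    path = Path(path)
    if mkdir:
        path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(asdict(metrics), indent=2))


def load_json(path):
    """Rebuild the dataclass from a JSON file written by dump_json."""
    return ToyMetrics(**json.loads(Path(path).read_text()))


with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "nested" / "metrics.json"  # parent does not exist yet
    original = ToyMetrics(iou_mean=0.71, deg_mean=4.2, iou_acc=[0.9, 0.6])
    dump_json(original, target, mkdir=True)
    restored = load_json(target)
```

Without mkdir=True the write would fail here, because the "nested" directory has not been created.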

Bases: object

Rotation (towards 0) AUC.

Average rotation error (unit: degree).

IoU accuracies over the list of thresholds.

IoU average precision over the list of thresholds.

IoU (towards 1) AUC over a list of ranges.

Average IoU.

Pose accuracies over the list of rotation-translation thresholds.

Pose error average precision.

Pose error (both towards 0) VUS over a list of ranges.

Translation (towards 0) AUC.

Average translation error (unit: cm).

Bases: object

The normalised (divided by the range of thresholds) AUC (or volume under surface) value.

If preserved, this field contains the x coordinates of the curve.

If preserved, this field contains the y coordinates of the curve.
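To make "normalised" concrete, the sketch below integrates an accuracy-versus-threshold curve with the trapezoidal rule and divides by the width of the threshold range. The function name `normalised_auc`, the sample errors and the choice of the trapezoidal rule are all assumptions made for illustration.

```python
import numpy as np


def normalised_auc(thresholds, accuracies):
    """Trapezoidal area under the curve, divided by the threshold range."""
    x = np.asarray(thresholds, dtype=float)
    y = np.asarray(accuracies, dtype=float)
    area = float(np.sum((x[1:] - x[:-1]) * (y[1:] + y[:-1]) / 2.0))
    return area / float(x[-1] - x[0])


# Made-up rotation errors (degrees) and thresholds from 0 to 10 degrees.
errors = np.array([1.0, 3.0, 7.0, 12.0])
xs = np.linspace(0.0, 10.0, 11)  # curve x coordinates
ys = np.array([(errors <= t).mean() for t in xs])  # curve y coordinates
auc = normalised_auc(xs, ys)
```

With this normalisation a model that is accurate at every threshold scores 1, regardless of how wide the threshold range is.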

Functions

Find a maximum matching of a bipartite graph with approximately maximum weights using linear_sum_assignment.

Parameters:

- weights_NxM – weight array of the bipartite graph

- min_weight – (int): minimum valid weight of matched edges

Returns: (l_matches, r_matches)

- l_match: array of length N containing matching index in r, -1 for not matched

- r_match: array of length M containing matching index in l, -1 for not matched
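The following sketch shows one way such a matching could be built on scipy.optimize.linear_sum_assignment. The function name `match_bipartite`, the inclusive comparison against min_weight and the choice to filter weak edges after the assignment are assumptions; the library may handle these details differently.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def match_bipartite(weights_NxM, min_weight=0.0):
    """Maximum-weight assignment, discarding pairs below min_weight."""
    weights = np.asarray(weights_NxM, dtype=float)
    n, m = weights.shape
    l_match = np.full(n, -1, dtype=int)  # for each left node, its right partner
    r_match = np.full(m, -1, dtype=int)  # for each right node, its left partner
    rows, cols = linear_sum_assignment(weights, maximize=True)
    for i, j in zip(rows, cols):
        if weights[i, j] >= min_weight:  # drop weak edges after assignment
            l_match[i] = j
            r_match[j] = i
    return l_match, r_match


weights = np.array([[0.9, 0.1, 0.0],
                    [0.8, 0.7, 0.0]])
l_match, r_match = match_bipartite(weights, min_weight=0.5)
```

Here row 0 pairs with column 0 and row 1 with column 1 (total weight 1.6), and column 2 stays unmatched.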

Calculate average precision (AP).

The detailed algorithm is

- Sort pred_indicator_M by pred_scores_M in decreasing order, and compute precisions as $p_i = \frac{1}{i} \sum_{j=1}^{i} \text{pred\_indicator\_M}[j]$.

Note

According to this issue, when different indicator values share the same score, their order affects the final AP. To make the output unique, we add a second sorting key: among equal scores, indicator values are sorted from high to low.

- Compute recalls by $r_i = \frac{1}{N} \sum_{j=1}^{i} \text{pred\_indicator\_M}[j]$ (where $N$ is N_gt).

- Suffix maximize the precisions: $p'_i = \max_{j \ge i} p_j$.

- Compute average precision (ref): $\mathrm{AP} = \sum_{i=1}^{M} (r_i - r_{i-1})\, p'_i$, with $r_0 = 0$.

Parameters:


- pred_indicator_M – A 1D 0-1 array, 0 for false positive, 1 for true positive.

- pred_scores_M – Confidence (e.g. IoU) of the prediction, between 0 and 1.

- N_gt – Number of ground truth instances. If not provided, it is set to M.

Returns: the AP value. If M == 0, return NaN.

>>> inds = np.array([1, 0, 1, 0, 1, 0, 0])

>>> scores = np.array([1, 1, 1, 1, 1, 1, 1])

>>> float(cutoop.eval_utils.compute_average_precision(inds, scores))

0.4285714328289032
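The four steps listed above can be sketched in NumPy as follows. This is an illustrative reimplementation under the stated reading of the algorithm, with a hypothetical function name `average_precision`; it is not the library's source, although it gives 3/7 on the example above, which agrees with the documented output up to floating-point precision.

```python
import numpy as np


def average_precision(pred_indicator_M, pred_scores_M, N_gt=None):
    """AP from a 0-1 true-positive indicator and prediction scores."""
    indicator = np.asarray(pred_indicator_M, dtype=float)
    scores = np.asarray(pred_scores_M, dtype=float)
    M = len(indicator)
    if M == 0:
        return float("nan")
    N = M if N_gt is None else N_gt
    # Primary key: score, descending. Tie-break: indicator, descending.
    order = np.lexsort((-indicator, -scores))
    hits = np.cumsum(indicator[order])  # true positives among the top i
    precisions = hits / np.arange(1, M + 1)
    recalls = hits / N
    # Suffix maximum: each precision becomes the best precision at or after it.
    precisions = np.maximum.accumulate(precisions[::-1])[::-1]
    # Sum precision times the recall gained at each rank (recall starts at 0).
    recall_steps = np.diff(np.concatenate(([0.0], recalls)))
    return float(np.sum(recall_steps * precisions))


inds = np.array([1, 0, 1, 0, 1, 0, 0])
scores = np.array([1, 1, 1, 1, 1, 1, 1])
ap = average_precision(inds, scores)
```

With all scores tied, the tie-break moves the three true positives to the front, so the precision is 1 while recall rises to 3/7 and no recall is gained afterwards.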

Finds matches between prediction and ground truth instances. Two matched objects must belong to the same class. Under this restriction, the match with higher IoU is of greater chance to be selected.

Scores are used to sort predictions.

Returns: (gt_match, pred_match, overlaps)

- gt_match: 1-D array. For each GT mask it has the index of the matched predicted mask, otherwise it is marked as -1.

- pred_match: 1-D array. For each predicted mask, it has the index of the matched GT mask, otherwise it is marked as -1.

- overlaps: M x N IoU overlaps (negative values indicate distinct classes).

Computes IoU overlaps between each pair of two sets of masks.

masks1, masks2: arrays of shape (Height, Width, instances).

Masks can be float arrays, the value > 0.5 is considered True, otherwise False.

Returns: N x M ious
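A pairwise mask IoU of this shape can be computed with one matrix product over flattened masks. The sketch below uses a hypothetical function name `mask_overlaps`, and returning 0 when both masks are empty is an assumption made for the example.

```python
import numpy as np


def mask_overlaps(masks1, masks2):
    """Pairwise IoU between (H, W, N) and (H, W, M) masks, returned as N x M."""
    masks1 = np.asarray(masks1)
    masks2 = np.asarray(masks2)
    # Binarise at 0.5 and flatten the pixels: (H*W, instances).
    flat1 = (masks1 > 0.5).reshape(-1, masks1.shape[-1]).astype(np.float64)
    flat2 = (masks2 > 0.5).reshape(-1, masks2.shape[-1]).astype(np.float64)
    area1 = flat1.sum(axis=0)
    area2 = flat2.sum(axis=0)
    intersections = flat1.T @ flat2  # (N, M) shared pixel counts
    unions = area1[:, None] + area2[None, :] - intersections
    # Empty unions yield 0 instead of a division by zero (an assumption).
    return np.divide(
        intersections, unions, out=np.zeros_like(intersections), where=unions > 0
    )


a = np.zeros((2, 2, 1))
a[0, :, 0] = 1.0  # one mask covering the top row
b = np.zeros((2, 2, 2))
b[0, 0, 0] = 1.0  # first mask: top-left pixel only
b[1, :, 1] = 1.0  # second mask: bottom row
ious = mask_overlaps(a, b)
```

The top-row mask shares one of two pixels with the first mask (IoU 0.5) and none with the second (IoU 0).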

Separate the original sequence into several subsequences, of which each belongs to a unique class label. The corresponding class label is also returned.

Returns:

a tuple (labels, *sequences) where

- sequences is a list of M lists of K ndarrays, where M is the number of values and K is the number of occurred classes.

- labels is an ndarray of length K.

>>> from cutoop.eval_utils import group_by_class

>>> group_by_class(np.array([0, 1, 1, 1, 1, 0, 1]), np.array([0, 1, 4, 2, 8, 5, 7]))

(array([0, 1]), [array([0, 5]), array([1, 4, 2, 8, 7])])
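The grouping shown in the example above can be reproduced with boolean masks. The sketch below is a hypothetical reimplementation (`group_by_class_sketch`), not the library's code; the return order follows the example output, with the labels first.

```python
import numpy as np


def group_by_class_sketch(class_labels, *values):
    """Split each value array into one sub-array per unique class label."""
    class_labels = np.asarray(class_labels)
    labels = np.unique(class_labels)  # sorted unique labels, length K
    sequences = [
        [np.asarray(value)[class_labels == label] for label in labels]
        for value in values
    ]
    return (labels, *sequences)


labels, grouped = group_by_class_sketch(
    np.array([0, 1, 1, 1, 1, 0, 1]), np.array([0, 1, 4, 2, 8, 5, 7])
)
```

Elements keep their original relative order within each class, so class 1 yields 1, 4, 2, 8, 7 rather than a sorted sequence.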